Picture this: you’re home after another long day of work. You’re looking forward to sitting back, relaxing and spending a few well-deserved hours on YouTube. You find a video you want to watch, cue it up and notice — almost immediately — that something isn’t right. Instead of crystal-clear video, you’re seeing something quite different: frame after frame of grainy, sub-par footage.

If you’re unfamiliar with the mechanics of video production, you don’t know what the problem is, only that you’ve encountered it before.

If, on the other hand, you’re a filmmaker, post-producer, or computer vision expert, you know exactly what you’re looking at. You also know that nothing ruins video quite like noise. It’s unpleasant to the eye and distracting to viewers. And not only does it ruin the viewing experience, it also takes a lot of unnecessary bits to compress, encode and transmit, which is bad for the bottom line.

Understanding its causes and how best to eliminate it is key to creating immersive professional-quality video experiences.

What is image noise and what causes it?

In non-technical terms, image noise consists of random variations in brightness or color information in the shot you are trying to capture. It is most likely to show up in low-light conditions and/or when shooting with small sensors. The mechanics are pretty simple: the more you compensate for low light by raising gain and ISO, the more the noise is amplified along with the image.

Credit: Jaime VD.

There are two main types of camera sensors: CCD (charge-coupled device) sensors and CMOS (active-pixel) sensors. CMOS sensors are now widespread in both consumer and professional equipment, but this article focuses primarily on the more traditional CCD sensors.

CCD sensors consist of an array of capacitors, each of which is analogous to a pixel. When an image is projected through the lens onto the sensor, each capacitor accumulates an electric charge proportional to the light intensity at that location.

These charges are then shifted out, one after another, into a charge amplifier, which converts them into a series of voltages. The voltages, in turn, are sampled, digitized, stored and processed to give rise to the final image.
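The readout chain just described can be sketched as a toy model. This is a simplified illustration under clearly stated assumptions: `ccd_readout` is a hypothetical helper, and the conversion factor (`volts_per_electron`), full-scale voltage and 8-bit depth are made-up example values, not specifications of any real sensor. It deliberately ignores noise so that the charge-to-voltage-to-digital-value steps stay visible.

```python
def ccd_readout(charges, volts_per_electron=2e-6, full_scale_volts=0.02, bits=8):
    """Toy CCD readout chain: charge packets -> voltages -> digital values.

    charges: 2D list of electrons accumulated by each capacitor (pixel).
    The conversion factors and bit depth are hypothetical example values.
    """
    max_code = 2 ** bits - 1
    image = []
    for row in charges:                    # rows are shifted out one after another
        digital_row = []
        for electrons in row:              # each packet reaches the charge amplifier in turn
            volts = electrons * volts_per_electron             # charge -> voltage
            code = round(volts / full_scale_volts * max_code)   # sample and digitize
            digital_row.append(min(max(code, 0), max_code))     # clip to the converter's range
        image.append(digital_row)
    return image
```

For example, `ccd_readout([[0, 2_500, 10_000, 20_000]])` returns `[[0, 64, 255, 255]]`: the brightest pixel exceeds the converter's range and clips.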

Noise is generated at every stage of this process. Certain situations lead to more noise than others. The most common root causes are listed below:

- Low-light settings: In well-lit environments, there is enough light to overpower the unwanted signal generated by sensor noise. In low-light conditions, on the other hand, the noise can be comparable to, or even larger than, the light signal itself, which leads to very noticeable levels of noise.

- High sensitivity modes: In digital photography, high sensitivity (a high ISO setting) is achieved by amplifying the signal read out from the CCD. But this amplification doesn’t discriminate between the light signal and the noise, so both are amplified, which is why these modes often result in more noticeable noise.

- Small sensors: Think of the CCD as a surface covered in photon-sensitive elements. The smaller these elements are (either because you want a high resolution or because the CCD itself has to be small, as in mobile devices or webcams), the fewer photons each pixel accumulates during an exposure. Fewer photons per pixel means a weaker signal relative to the noise, and that weaker signal has to be amplified more during readout, which amplifies the noise along with it.

- Interference: Sometimes, signals originating outside the camera (such as cosmic radiation or strong radio signals) can interfere with the recorded signal and add noise to the final shot.
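The causes above largely come down to the ratio between the light signal and the noise. A minimal sketch, assuming a simplified model in which the only noise sources are photon shot noise (whose variance equals the mean photon count) and a fixed read noise, and in which all noise enters before the gain stage. `pixel_snr` and its default values are hypothetical.

```python
import math

def pixel_snr(photons, read_noise_e=5.0, quantum_efficiency=1.0, gain=1.0):
    """Signal-to-noise ratio of one pixel under a simple shot + read noise model.

    All parameters are illustrative assumptions, not real sensor specifications.
    """
    signal = photons * quantum_efficiency          # mean electrons collected
    shot_variance = signal                         # Poisson statistics: variance equals mean
    noise = math.sqrt(shot_variance + read_noise_e ** 2)
    # In this model, gain (ISO) scales signal and noise alike, so it cancels in the ratio
    return (gain * signal) / (gain * noise)

bright = pixel_snr(10_000)          # well-lit scene
dim = pixel_snr(100)                # same pixel, 100x less light
small_pixel = pixel_snr(25)         # dim scene, pixel with a quarter of the area
boosted = pixel_snr(100, gain=8.0)  # dim scene with high gain/ISO
```

Under these assumptions, `bright` comes out near 99.9, `dim` near 8.9 and `small_pixel` near 3.5, while `boosted` equals `dim`: raising the gain brightens the image without improving its signal-to-noise ratio. Real cameras also add some noise after amplification, so this is a simplification.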

Different kinds of noise

From left to right: Salt and pepper (Wikimedia), Shot (Mdf), Gaussian (Anton), Interference (Max221), Film grain (bottom right; Tristan Bowersox)

All the factors mentioned above lead to different kinds of noise, each of which has particular mathematical and visual characteristics used to identify it.

- Gaussian noise: This arises during acquisition. The sensor generates inherent noise that depends on the level of illumination and on its own temperature. In addition, the electronic circuits connected to the sensor inject their own electronic noise.

- Shot noise: Also known as photon shot noise, this is caused by random variation in the number of photons sensed by the CCD at any given exposure level. It is associated with the quantum processes inherent in both the generation of the photons and their conversion into electrons within the camera.

- Salt and pepper noise: Immediately recognizable by dark pixels in bright areas and light pixels in dark areas. This type of noise can result from errors when converting video from analog to digital, or from other errors in pixel interpretation, such as dead sensor elements.

- Quantization noise: In the context of image processing, quantization maps a range of values onto a single integer value, or a large set of values onto a smaller representative set. Quantization noise is the rounding error introduced during that process.

- Film grain: This is a random physical texture produced on footage shot using film stock, which occurs because processed photographic celluloid contains small metallic silver particles. It doesn’t naturally occur on digital footage for obvious reasons, but can be added in post-processing to achieve the iconic look of footage shot on film stock. Pixop’s film grain filter does just that.

- Anisotropic noise: This occurs when the sensor readout is sampled or quantized. It can cause finer details to blend together, create patterns that aren’t there, and/or render straight lines as jagged.

- Periodic noise: This is a kind of interference noise, meaning it originates outside the camera. It occurs when natural or man-made signals interfere with the recorded signal and typically manifests as something that looks like a repeating pattern that has been overlaid on the original image.
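To see several of these noise types side by side, they can be synthesized on a grey-value image. The sketch below is a simplified illustration, not a physically accurate camera model: images are plain Python lists of 8-bit grey values, every function name is hypothetical, and the parameters (`sigma`, `photons_per_level`, `amount`, `levels`, `amplitude`, `period`) are arbitrary. Film grain and anisotropic noise are left out.

```python
import math
import random

def clip(value, lo=0, hi=255):
    # Keep results inside the 8-bit grey-value range
    return max(lo, min(hi, value))

def add_gaussian(img, sigma, rng):
    # Signal-independent noise, e.g. from sensor temperature and readout electronics
    return [[clip(round(p + rng.gauss(0, sigma))) for p in row] for row in img]

def poisson(lam, rng):
    # Knuth's method for drawing a Poisson sample; adequate for small means
    threshold, k, prod = math.exp(-lam), 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k

def add_shot(img, photons_per_level, rng):
    # Photon counts fluctuate around their mean; fewer photons means relatively more noise
    return [[clip(round(poisson(p * photons_per_level, rng) / photons_per_level))
             for p in row] for row in img]

def add_salt_and_pepper(img, amount, rng):
    # A fraction `amount` of pixels is forced to pure black or pure white
    out = []
    for row in img:
        new_row = []
        for p in row:
            r = rng.random()
            if r < amount / 2:
                new_row.append(0)
            elif r < amount:
                new_row.append(255)
            else:
                new_row.append(p)
        out.append(new_row)
    return out

def quantize(img, levels):
    # Map 0..255 onto `levels` evenly spaced values; the rounding error is the noise
    step = 255 / (levels - 1)
    return [[round(round(p / step) * step) for p in row] for row in img]

def add_periodic(img, amplitude, period):
    # A sinusoidal pattern overlaid along each row, like electrical interference
    return [[clip(round(p + amplitude * math.sin(2 * math.pi * x / period)))
             for x, p in enumerate(row)] for row in img]

rng = random.Random(0)
flat = [[128] * 8 for _ in range(8)]   # a uniform grey test patch
gaussian = add_gaussian(flat, 10, rng)
shot = add_shot(flat, 0.5, rng)
salt_pepper = add_salt_and_pepper(flat, 0.1, rng)
quantized = quantize(flat, 4)
periodic = add_periodic(flat, 20, 4)
```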

What is denoising?

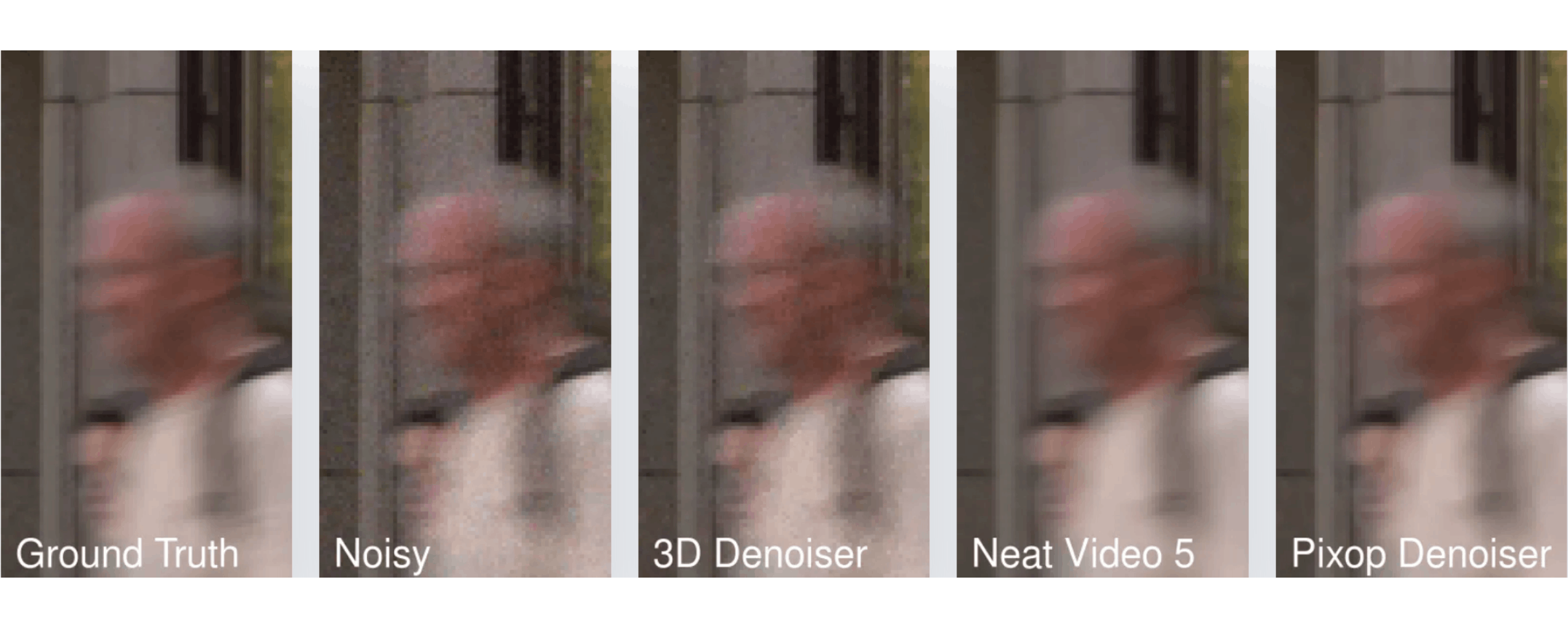

Noisy frame denoised using popular denoising software programs.

Because too much noise makes for unpleasant viewing, denoising is used in image and video production to take footage from grainy to crystal-clear and improve the viewer’s quality of experience. There are several techniques, each applying different mathematical and statistical models. They are broadly classified as classic denoising methods (spatial domain methods), transform techniques, and methods that rely on machine learning and CNNs (convolutional neural networks).

In the case of video, the desired signal we want to extract and preserve is the light (photons) accumulated in the pixels of a CCD. The noise we want to eliminate is the undesired fluctuation in color and luminance caused by one or more of the factors presented previously, either within each frame or from one frame to the next.

A good noise reduction technique is one that suppresses noise effectively in uniform regions; preserves edges and other image characteristics, such as fine details and textures; and provides a visually natural experience.

However, because noise, edges and textures all consist of high-frequency components, simply filtering out those frequencies removes desirable detail along with the noise. Some major challenges include:

- Ensuring that flat areas remain smooth

- Ensuring that edges are protected without blurring

- Ensuring that textures are preserved

- Ensuring that new artifacts are not generated
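The trade-off behind these challenges can be demonstrated on a one-dimensional "scanline". This sketch assumes a synthetic step edge with seeded Gaussian noise and uses a simple moving-average (box) filter as the low-pass filter; the helper names are hypothetical. Smoothing shrinks the noise in the flat areas, but it also spreads the single-sample edge over several samples.

```python
import random
import statistics

def box_filter_1d(signal, radius):
    # Centred moving average; indices are clamped at the ends (replicate padding)
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def max_step(signal):
    # Largest jump between neighbouring samples: a rough proxy for edge sharpness
    return max(abs(b - a) for a, b in zip(signal, signal[1:]))

rng = random.Random(42)
clean = [50.0] * 40 + [200.0] * 40            # two flat areas separated by one sharp edge
noisy = [v + rng.gauss(0, 10) for v in clean]
smoothed = box_filter_1d(noisy, radius=3)

flat_noise_before = statistics.pstdev(noisy[5:35])   # samples well away from the edge
flat_noise_after = statistics.pstdev(smoothed[5:35])
edge_before = max_step(noisy)
edge_after = max_step(smoothed)
```

Comparing `flat_noise_after` with `flat_noise_before` shows the noise reduction; comparing `edge_after` with `edge_before` shows the price paid at the edge.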

Classic denoising

Spatial domain methods aim to remove noise by calculating the grey value of each pixel based on the correlation between pixels and image patches in the original image. The grey value of a pixel is defined as its brightness, with the minimum value being 0 and the maximum value being dependent on the digitization depth of the image. For an 8-bit depth, this maximum value is 255.
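Written out, for an image with a bit depth of b bits per sample, the grey value g takes integer values in the following range:

```latex
g \in \{0, 1, \dots, 2^{b} - 1\}, \qquad g_{\max} = 2^{b} - 1
% b = 8:  g_max = 255
% b = 10: g_max = 1023
% b = 16: g_max = 65535
```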

Spatial domain methods can further be divided into spatial domain filtering and variational denoising methods.

Spatial domain filtering is based on the principle that noise tends to occupy the higher end of the frequency spectrum, so it can be suppressed using filters such as mean filtering, Wiener filtering, or weighted median filtering. However, as discussed previously, this approach can also blur and soften other high-frequency elements like edges and textures, which is an undesirable outcome.
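Two of the filters mentioned above can be sketched directly on a 2D list of grey values. This is an illustrative version with hypothetical helper names, assuming a square neighbourhood and replicated borders; it uses a plain (unweighted) median in place of the weighted median, and does not implement the Wiener filter. Note that the median filter is a nonlinear order-statistic filter rather than a frequency-selective one, which is exactly why it behaves differently at edges.

```python
import statistics

def neighbourhood(img, y, x, radius):
    # Square window around (y, x); out-of-range coordinates are clamped to the border
    h, w = len(img), len(img[0])
    return [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)]

def apply_filter(img, reduce_fn, radius=1):
    # Replace every pixel with reduce_fn applied to its neighbourhood
    return [[reduce_fn(neighbourhood(img, y, x, radius)) for x in range(len(img[0]))]
            for y in range(len(img))]

def mean_filter(img, radius=1):
    return apply_filter(img, statistics.fmean, radius)

def median_filter(img, radius=1):
    return apply_filter(img, statistics.median, radius)

salty = [[100] * 5 for _ in range(5)]
salty[2][2] = 255                                     # one isolated "salt" pixel
step = [[0, 0, 0, 255, 255, 255] for _ in range(3)]   # a vertical edge
```

On these test images, the median filter removes the isolated 255 pixel completely and leaves the step edge untouched, whereas the mean filter smears the outlier into its neighbours (about 117 at the centre) and softens the edge to 85 and 170.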

Variational denoising methods, on the other hand, are based on the principle that signals with excessive and possibly spurious detail (i.e. noise) have high total variation (roughly, the sum of the absolute differences between neighbouring pixel values), which is related to, but different from, frequency. It follows that reducing the total variation, while keeping the result a close match to the original signal, tones down excessive noise while preserving details like edges.
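A minimal sketch of the variational idea in one dimension, assuming the Rudin-Osher-Fatemi (ROF) formulation: minimize a data-fidelity term plus λ times the total variation. To keep it short, it uses plain gradient descent on a smoothed TV term (the `eps` parameter) rather than the specialised solvers used in practice; `tv_denoise_1d`, `lam`, `eps` and the iteration count are illustrative choices.

```python
import math
import random

def total_variation(u):
    # Sum of absolute differences between neighbouring samples
    return sum(abs(b - a) for a, b in zip(u, u[1:]))

def tv_denoise_1d(f, lam=20.0, eps=1.0, iterations=3000):
    """Gradient descent on a smoothed ROF energy:
    E(u) = 0.5 * sum((u - f)^2) + lam * sum(sqrt((u[i+1] - u[i])^2 + eps^2))
    """
    n = len(f)
    u = list(f)
    step = 1.0 / (1.0 + 4.0 * lam / eps)   # 1 / (upper bound on the gradient's Lipschitz constant)
    for _ in range(iterations):
        # phi'(d) = d / sqrt(d^2 + eps^2) for each forward difference d = u[i+1] - u[i]
        dphi = [(u[i + 1] - u[i]) / math.sqrt((u[i + 1] - u[i]) ** 2 + eps ** 2)
                for i in range(n - 1)]
        grad = []
        for i in range(n):
            g = u[i] - f[i]                # fidelity: stay close to the input
            if i > 0:
                g += lam * dphi[i - 1]     # from the difference u[i] - u[i-1]
            if i < n - 1:
                g -= lam * dphi[i]         # from the difference u[i+1] - u[i]
            grad.append(g)
        u = [ui - step * gi for ui, gi in zip(u, grad)]
    return u

rng = random.Random(7)
clean = [50.0] * 40 + [200.0] * 40
noisy = [v + rng.gauss(0, 10) for v in clean]
denoised = tv_denoise_1d(noisy)
```

Unlike a moving average, the total variation of the result drops sharply while the jump between samples 39 and 40 remains large.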

Transform techniques

In contrast with spatial methods, where denoising is performed on the actual image, transform techniques, as the name suggests, first ‘translate’ the given image into another domain by performing a set of mathematical operations known as transformations.

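They then perform denoising in the transformed domain, where the image and the noise are easier to tell apart (for example, much of the image’s energy may be concentrated in a few large coefficients while the noise is spread thinly across many), before transforming the result back. Transform techniques are categorized as data-adaptive or non-data-adaptive, based on the kind of transformation performed on the image: non-data-adaptive transforms such as the Fourier, discrete cosine or wavelet transforms use a fixed basis, while data-adaptive ones such as principal component analysis (PCA) derive their basis from the data itself.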

The disadvantage of these techniques is that they generally tend to be more demanding in terms of compute power, while spatial domain techniques are faster and more straightforward. On the other hand, transform techniques can be extremely effective in some cases, so it's a case of the classic speed versus visual quality trade-off.
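As an illustration of a non-data-adaptive transform technique, the sketch below denoises a 1D signal with a Haar wavelet transform and soft thresholding: transform, shrink the small detail coefficients (mostly noise) toward zero, transform back. This is a swapped-in textbook example, not a method named in this article. It assumes the signal length is a power of two and that the noise level σ = 10 is known, which is used to set the so-called universal threshold σ√(2 ln n). The step is placed on a dyadic boundary, which flatters the Haar basis.

```python
import math
import random

def haar_forward(x):
    # Full orthonormal Haar decomposition; len(x) must be a power of two
    coeffs = list(x)
    n = len(coeffs)
    while n > 1:
        half = n // 2
        avg = [(coeffs[2 * i] + coeffs[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        diff = [(coeffs[2 * i] - coeffs[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        coeffs[:n] = avg + diff      # averages first, details after them
        n = half
    return coeffs

def haar_inverse(coeffs):
    # Undo haar_forward level by level, from the coarsest to the finest
    x = list(coeffs)
    n = 1
    while n < len(x):
        merged = []
        for a, d in zip(x[:n], x[n:2 * n]):
            merged += [(a + d) / math.sqrt(2), (a - d) / math.sqrt(2)]
        x[:2 * n] = merged
        n *= 2
    return x

def soft_threshold(c, t):
    # Shrink a coefficient toward zero by t; anything smaller than t becomes zero
    return math.copysign(max(abs(c) - t, 0.0), c)

def wavelet_denoise(x, threshold):
    c = haar_forward(x)
    # c[0] is the overall average; only the detail coefficients are thresholded
    c = [c[0]] + [soft_threshold(v, threshold) for v in c[1:]]
    return haar_inverse(c)

def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

rng = random.Random(3)
clean = [50.0] * 32 + [200.0] * 32
noisy = [v + rng.gauss(0, 10) for v in clean]
threshold = 10 * math.sqrt(2 * math.log(len(noisy)))   # universal threshold, sigma assumed known
denoised = wavelet_denoise(noisy, threshold)
```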

Machine-learning techniques

While the denoising methods presented above are good at removing noise from images and video, they can be slow and computationally expensive. For someone who just wants to denoise footage, without the benefit of the technical knowledge behind these techniques, they also often require extensive manual parameter tweaking in software programs with steep learning curves. Deep learning methodologies (often involving convolutional neural networks) were developed in part to address this.

Machine-learning approaches to denoising train sophisticated models on thousands of degraded/clean image pairs. During training, the model tries to approximate a mapping function: the unknown underlying function that consistently maps each degraded input in the dataset to its clean counterpart.

In extremely simple terms, what this means is that the algorithms are attempting to learn the relationship between the two sets of images. Once learned, they would be able to automatically apply it to similarly noisy images or videos to produce the denoised versions.
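The idea of learning a mapping from degraded/clean pairs can be shown at toy scale. In this sketch, a three-tap linear filter plus a bias term stands in for the neural network, and it is fitted in closed form by least squares instead of by gradient-descent training. The synthetic sine-wave training data, the noise level and all function names are hypothetical; a real denoising CNN learns a far richer, nonlinear mapping from real footage.

```python
import math
import random

def windows(x):
    # Feature vector per interior sample: left neighbour, itself, right neighbour, bias
    return [[x[i - 1], x[i], x[i + 1], 1.0] for i in range(1, len(x) - 1)]

def solve(a, b):
    # Gaussian elimination with partial pivoting for a small dense system a @ w = b
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def fit_mapping(pairs):
    # Least squares: find w minimising sum((w . features - clean)^2) over all pairs
    k = 4
    ata = [[0.0] * k for _ in range(k)]
    atb = [0.0] * k
    for noisy, clean in pairs:
        for feats, target in zip(windows(noisy), clean[1:-1]):
            for r in range(k):
                atb[r] += feats[r] * target
                for c in range(k):
                    ata[r][c] += feats[r] * feats[c]
    return solve(ata, atb)

def apply_mapping(w, noisy):
    # Interior samples use the learned filter; the two end samples pass through unchanged
    inner = [sum(wi * fi for wi, fi in zip(w, feats)) for feats in windows(noisy)]
    return [noisy[0]] + inner + [noisy[-1]]

def make_pair(rng, n=200, sigma=0.1):
    # One synthetic (noisy, clean) training pair built from a random sine wave
    phase, period = rng.uniform(0, 2 * math.pi), rng.uniform(20, 60)
    clean = [0.5 + 0.4 * math.sin(2 * math.pi * i / period + phase) for i in range(n)]
    noisy = [c + rng.gauss(0, sigma) for c in clean]
    return noisy, clean

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(1)
training_pairs = [make_pair(rng) for _ in range(50)]   # degraded/clean pairs
weights = fit_mapping(training_pairs)                   # the learned "mapping function"

test_noisy, test_clean = make_pair(rng)                 # an unseen example
restored = apply_mapping(weights, test_noisy)
```

The learned `weights` should come out roughly as a small smoothing kernel, and applying them to the unseen noisy signal lowers its error against the clean version.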

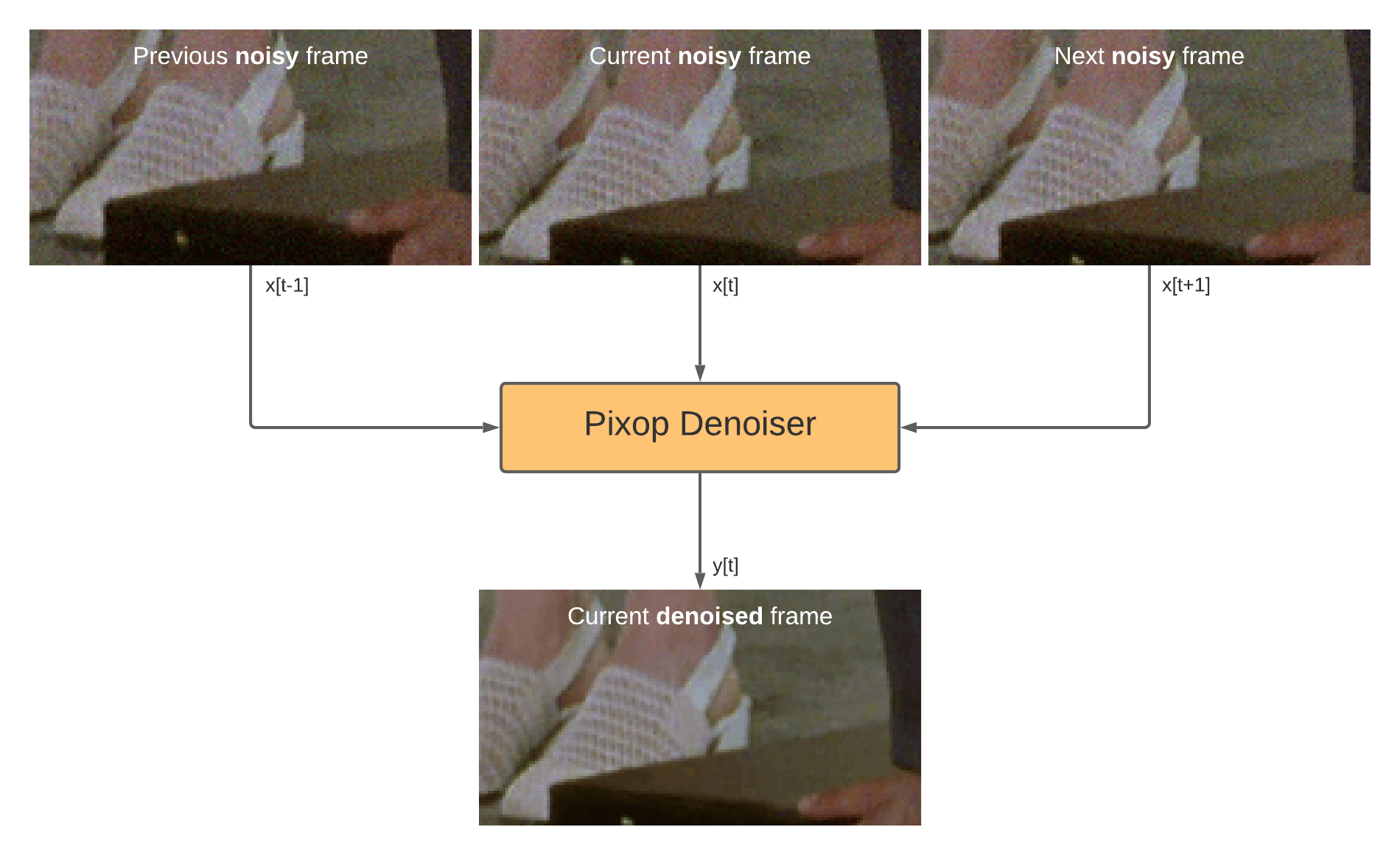

Pixop's Denoiser is based on this principle. Videos are processed frame-by-frame using three video frames (previous, current and next) as input. An enhanced frame is then produced via inference using our pre-trained neural network model. The diagram below is illustrative of the process:

The steps involved in denoising a frame using Pixop's denoise filter.
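The three-frame windowing described above can be sketched as follows. Pixop's actual network is not described here, so `model` is a hypothetical placeholder: by default it is a per-pixel average of the three frames, which is only a stand-in for a pre-trained network. How the first and last frames are handled is also an assumption; here the missing neighbour is replaced by the frame itself.

```python
from typing import Callable, List, Sequence

Frame = List[List[float]]  # one greyscale frame as rows of pixel values

def temporal_mean(prev: Frame, cur: Frame, nxt: Frame) -> Frame:
    # Placeholder "model": per-pixel average of the three frames (NOT a neural network)
    return [[(a + b + c) / 3 for a, b, c in zip(ra, rb, rc)]
            for ra, rb, rc in zip(prev, cur, nxt)]

def denoise_video(frames: Sequence[Frame],
                  model: Callable[[Frame, Frame, Frame], Frame] = temporal_mean) -> List[Frame]:
    last = len(frames) - 1
    output = []
    for t, cur in enumerate(frames):
        prev = frames[max(t - 1, 0)]          # first frame: reuse itself as "previous"
        nxt = frames[min(t + 1, last)]        # last frame: reuse itself as "next"
        output.append(model(prev, cur, nxt))  # one enhanced frame per input frame
    return output
```

Any callable with the same three-frame signature, such as a wrapper around a trained network, could be passed as `model`.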

Why denoise, anyway?

Ever since our earliest ancestors were scratching stick figures into the sides of caves, our desire to record, process and preserve snapshots of our lives has driven much of our creative output. It seems a fundamental part of what it means to be human. This is as true today as it was back then, with an ever-growing explosion in the number of digital images and videos captured every day.

But reality is noisy, and any information-recording endeavor is bound to run into issues with recording the wrong kind of information. We’re bombarded with signals every which way we turn: cosmic radiation beating down on us from space; bubbles of radioactive decay, like swamp gas, slowly permeating the air; the chaotic world of subatomic particles ping-ponging far out of sight of our powers of perception.

Picking out and preserving the desired information, in light of all of this, takes on epic proportions, with considerations far greater than aesthetics or the need to save on bandwidth.

Credit: Wellcome Collection.

After all, we humans have always wanted to present the best version of ourselves and what we create to the world: papering over the cracks, smoothing rough-hewn edges, carefully curating and honing anything we deem suitable to record in perpetuity. And lately, the democratization of technology has given every one of us the opportunity to tell our story. It’s only fair we are given the tools to do it properly.

Denoising just happens to be one of those tools. It’s something experts have spent years refining, with applications ranging from medical imaging to astronomy to that video you shot recently that didn’t turn out quite the way you were hoping. And while denoising will never be completely ‘solved’, given its nature as an under-determined problem (many different clean images are consistent with the same noisy one, so there is no single correct answer), researchers develop new and better denoising techniques every year.

The rise of CNN-powered methodologies, machine learning and AI means that denoising techniques and software programs are available to more people than ever before. From big media conglomerates looking to denoise decades worth of film, to amateur hobbyists with a few videos to perfect, there's a solution for everyone.

So why not take advantage? You — and your stories — deserve nothing less.